Florian et al. have published their recent work on symbol emergence for state estimation in supervised autonomy scenarios. With this approach, the robot can recognize and remember even previously unknown symbols, such as error states.

The paper was published in the Journal of Advanced Robotics.

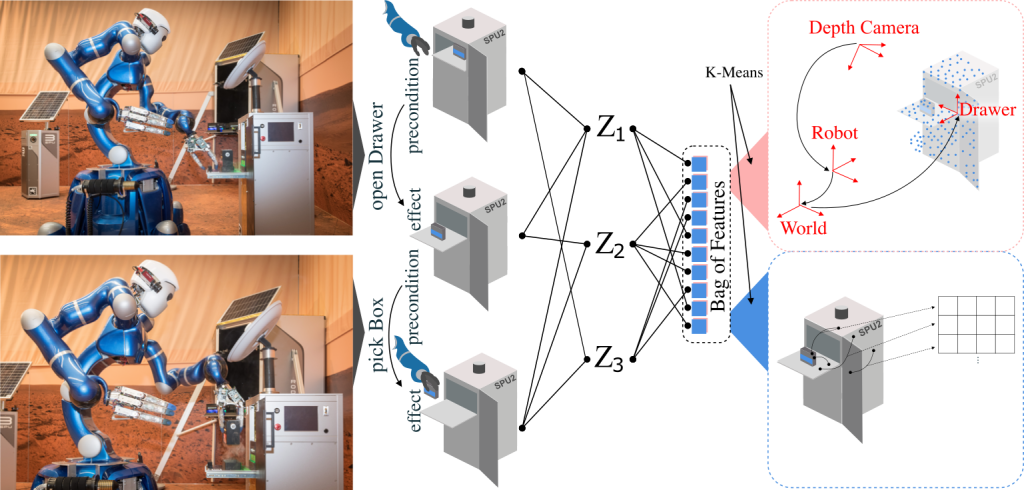

Abstract: In future Mars exploration scenarios, astronauts orbiting the planet will control robots on the surface with supervised autonomy to construct infrastructure necessary for human habitation. Symbol-based planning enables intuitive supervised teleoperation by presenting relevant action possibilities to the astronaut. While our initial analog experiments aboard the International Space Station (ISS) proved this scenario to be very effective, the complexity of the problem puts high demands on domain models. However, the symbols used in symbolic planning are error-prone as they are often hand-crafted and lack a mapping to actual sensor information. While this may lead to biased action definitions, the lack of feedback is even more critical. To overcome these issues, this paper explores the possibility of learning the mapping between multi-modal sensor information and high-level preconditions and effects of robot actions. To achieve this, we propose to utilize a Multi-modal Latent Dirichlet Allocation (MLDA) for unsupervised symbol emergence. The learned representation is used to identify domain-specific design flaws and assist in supervised autonomy robot operation by predicting action feasibility and assessing the execution outcome. The approach is evaluated in a realistic telerobotics experiment conducted with the humanoid robot Rollin’ Justin.
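To give a feel for how a Multi-modal LDA can let symbols emerge without labels, here is a minimal sketch of the generic model, not the authors' implementation. It assumes each observation (a "document") has already been discretized into integer "words" per sensor modality. All modalities of one document share a single topic distribution, while each modality keeps its own topic-word counts; the dominant topic of a document can then be read as its emergent symbol. The function name `mlda_gibbs`, the toy data, and the hyperparameters `alpha`, `beta`, and `iters` are hypothetical choices for illustration. Inference uses standard collapsed Gibbs sampling, where a token in modality m is reassigned to topic k with weight (n_dk + alpha) * (n_kw + beta) / (n_k + V_m * beta).

```python
import random


def mlda_gibbs(docs, num_topics, vocab_sizes, alpha=0.5, beta=0.1, iters=200, seed=0):
    """Collapsed Gibbs sampling for a generic multimodal LDA (illustrative sketch).

    docs: list of documents; each document is a list with one entry per modality,
          and each entry is a list of integer word ids in range(vocab_sizes[m]).
    Returns theta: one topic distribution per document (list of lists of floats).
    """
    rng = random.Random(seed)
    K, M = num_topics, len(vocab_sizes)
    # Topic counts per document, shared across all modalities of that document.
    n_dk = [[0] * K for _ in docs]
    # Word counts per topic, kept separately for each modality.
    n_kw = [[[0] * vocab_sizes[m] for _ in range(K)] for m in range(M)]
    n_k = [[0] * K for _ in range(M)]

    # Random initial topic assignment for every token.
    z = []
    for d, doc in enumerate(docs):
        z_d = []
        for m, words in enumerate(doc):
            z_dm = []
            for w in words:
                k = rng.randrange(K)
                z_dm.append(k)
                n_dk[d][k] += 1
                n_kw[m][k][w] += 1
                n_k[m][k] += 1
            z_d.append(z_dm)
        z.append(z_d)

    for _ in range(iters):
        for d, doc in enumerate(docs):
            for m, words in enumerate(doc):
                V = vocab_sizes[m]
                for i, w in enumerate(words):
                    # Remove the token's current assignment from all counts.
                    k = z[d][m][i]
                    n_dk[d][k] -= 1
                    n_kw[m][k][w] -= 1
                    n_k[m][k] -= 1
                    # Full conditional over topics for this token.
                    weights = [
                        (n_dk[d][t] + alpha) * (n_kw[m][t][w] + beta) / (n_k[m][t] + V * beta)
                        for t in range(K)
                    ]
                    k = rng.choices(range(K), weights=weights)[0]
                    # Add the token back under its newly sampled topic.
                    z[d][m][i] = k
                    n_dk[d][k] += 1
                    n_kw[m][k][w] += 1
                    n_k[m][k] += 1

    # Posterior-mean estimate of each document's topic distribution.
    theta = []
    for d in range(len(docs)):
        total = sum(n_dk[d]) + K * alpha
        theta.append([(n_dk[d][k] + alpha) / total for k in range(K)])
    return theta


# Hypothetical toy data: two modalities (e.g. discretized force and visual features).
docs = [
    [[0, 0, 1], [0, 1, 1]],
    [[0, 1, 1], [1, 0, 0]],
    [[2, 3, 3], [2, 2, 3]],
    [[3, 2, 2], [3, 3, 2]],
]
theta = mlda_gibbs(docs, num_topics=2, vocab_sizes=[4, 4])
symbols = [max(range(2), key=lambda k: t[k]) for t in theta]
print(symbols)
```

Because the topic distribution is shared across modalities, a topic learned this way ties together co-occurring evidence from different sensors. In the paper's setting, such a representation is what allows preconditions and effects to be linked to sensor data, but the real feature extraction and model configuration are not described in this post.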